Hi,

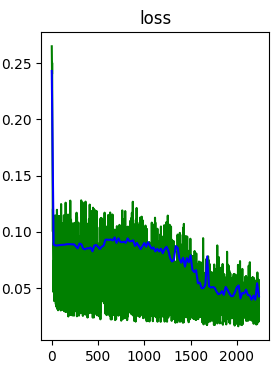

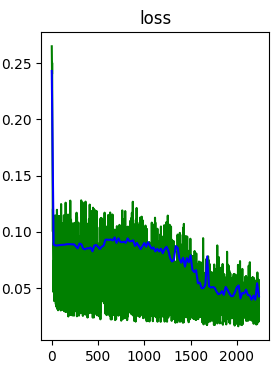

While training a quantum neural net, I got the following loss curves, and I cannot interpret them properly.

My guess is that the landscape is a plateau with the minimum sitting in a very deep hole, which would cause this oscillating error, but I am not sure. Do you have any suggestions, advice, or suspected causes?

Thanks

Hey @Daniel_Wang!

I assume we’re talking about the green line? If so, you’ll want to try an adaptive optimization method, like Adam. Adam adapts the effective step size of each parameter during training, based on running averages of its gradients, which can help it home in on the minimum.
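To make "adaptive" concrete, here is a minimal sketch of the Adam update rule (Kingma & Ba) in plain Python on a hypothetical 1-D quadratic, not on your model. The function name `adam_minimize` and the toy loss are assumptions for illustration; the default `beta1`, `beta2`, and `eps` values are the ones from the Adam paper. Note that the learning rate `lr` itself stays fixed; what adapts is the per-step size `lr * m_hat / sqrt(v_hat)`.

```python
import math

def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function with the Adam update rule.

    grad: callable returning the derivative at x (hypothetical toy setup).
    Returns the final x after `steps` updates.
    """
    x = x0
    m = 0.0  # running (biased) mean of the gradients
    v = 0.0  # running (biased) mean of the squared gradients
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        # The step is scaled by the gradient history, so its size does not
        # simply follow the raw gradient magnitude.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Toy loss f(x) = 100 * (x - 3)**2, whose derivative is 200 * (x - 3).
print(adam_minimize(lambda x: 200 * (x - 3), x0=0.0))  # ends close to the minimum at 3
```

In PennyLane the same rule is available as `qml.AdamOptimizer`, so the sketch is only meant to show what the optimizer does internally.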

Let's start there and see what happens! Let me know if that helps.

Hi,

Thank you for pointing that out. Yes, I am talking about the green line, which is the training error; the blue line is the generalization error. Actually, I am already using Adam here. My model uses a learnt quantum embedding produced by an MLP, followed by a PQC (parametrized quantum circuit) ansatz that processes the embedding, and I am trying to understand how I should adjust the model to lower the error.

In my case, I use the expectation value of a Z measurement on each qubit as the output, so I think I only need Pauli-Y rotation gates in my ansatz, right? Compared with Pauli-Y, Pauli-X and Pauli-Z only contribute phases, which would not change the measurement probabilities… Correct me if I am wrong!
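One way to test this reasoning is to compute ⟨Z⟩ directly for a single qubit. The sketch below uses plain Python state vectors rather than any quantum library, with the standard RX, RY, and RZ matrices; all helper names are hypothetical. It checks three things: starting from |0⟩, RX and RY give the same ⟨Z⟩ = cos θ; RZ alone leaves ⟨Z⟩ unchanged; and an RZ placed between two RY rotations does change ⟨Z⟩, because the phase it adds is converted into a population difference by the following rotation. Multi-qubit circuits with entangling gates are not covered here.

```python
import cmath
import math

# Standard single-qubit rotation gates as 2x2 complex matrices.
def rx(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -1j * s], [-1j * s, c]]

def ry(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -s], [s, c]]

def rz(t):
    return [[cmath.exp(-1j * t / 2), 0], [0, cmath.exp(1j * t / 2)]]

def apply(u, psi):
    """Multiply a 2x2 gate into a 2-component state vector."""
    return [u[0][0] * psi[0] + u[0][1] * psi[1],
            u[1][0] * psi[0] + u[1][1] * psi[1]]

def run(gates, psi=(1, 0)):
    """Apply the gates in order, starting from |0> by default."""
    psi = list(psi)
    for g in gates:
        psi = apply(g, psi)
    return psi

def expval_z(psi):
    """<Z> = P(0) - P(1) for a single qubit."""
    return abs(psi[0]) ** 2 - abs(psi[1]) ** 2

theta = 0.7
print(expval_z(run([rx(theta)])), expval_z(run([ry(theta)])), math.cos(theta))
print(expval_z(run([rz(theta)])))  # RZ alone: <Z> stays at 1
# RZ(b) between two RY(pi/2) rotations: <Z> = -cos(b), so b matters.
for b in (0.0, math.pi / 2, math.pi):
    print(b, expval_z(run([ry(math.pi / 2), rz(b), ry(math.pi / 2)])))
```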

And since I use a learnt embedding from an MLP, the problem probably lies in the ansatz, given that the number of qubits is fixed…?

Thank you so much!

Hi @Daniel_Wang ,

I’m not sure what the issue is, but you could try changing your cost function to a local cost function.

The demo on alleviating barren plateaus with local cost functions can help.
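To illustrate why local cost functions can help, here is a toy sketch in plain Python, not your model. It assumes a hypothetical ansatz of n unentangled qubits, each prepared as RY(θᵢ)|0⟩, so that P(qubit i = 0) = cos²(θᵢ/2). The global cost is 1 − P(all qubits in |0⟩) and the local cost is 1 − (1/n)·Σᵢ P(qubit i = 0). Gradients are computed with the parameter-shift rule, which is exact here because each cost has the form a + b·cos θᵢ + c·sin θᵢ in every parameter. With all θᵢ = π/2, the global-cost gradient is 0.5ⁿ (exponential decay in n) while the local-cost gradient is 1/(2n).

```python
import math

def p0(theta):
    """P(measuring 0) for a single qubit prepared as RY(theta)|0>."""
    return math.cos(theta / 2) ** 2

def global_cost(thetas):
    """C_G = 1 - P(all qubits in |0>): a product over all qubits."""
    prod = 1.0
    for t in thetas:
        prod *= p0(t)
    return 1.0 - prod

def local_cost(thetas):
    """C_L = 1 - average single-qubit P(0): a sum of local terms."""
    return 1.0 - sum(p0(t) for t in thetas) / len(thetas)

def parameter_shift(cost, thetas, i, shift=math.pi / 2):
    """Exact derivative of cost w.r.t. thetas[i] for rotation-gate parameters."""
    plus = list(thetas)
    plus[i] += shift
    minus = list(thetas)
    minus[i] -= shift
    return (cost(plus) - cost(minus)) / 2

for n in (2, 4, 8, 16):
    thetas = [math.pi / 2] * n  # every qubit halfway between |0> and |1>
    print(n, parameter_shift(global_cost, thetas, 0), parameter_shift(local_cost, thetas, 0))
```

In a real, entangled ansatz the scaling is more involved, but this product-state case shows the basic difference between the two kinds of cost.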

Let me know if it does help!