Hi @eltabre!

> When I add the Quantum Keras Layer, it has 0 params and is unused. How can I fix that?

This can be fixed by running a forward pass through the model before printing the summary, like so:

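```python
# Add the quantum and classical layers as before
model.add(qlayer)
model.add(clayer)

# Run a forward pass on a couple of samples before printing the summary
model(x_test[:2])
model.summary()
```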

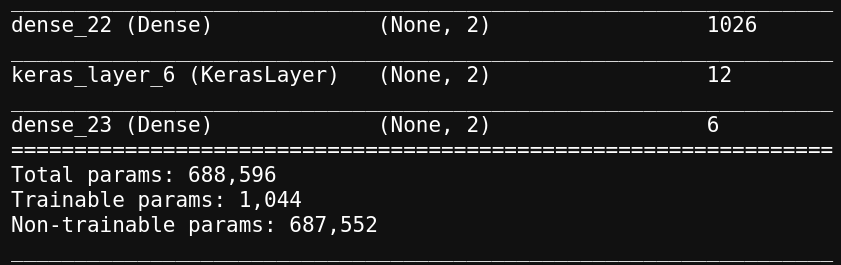

The summary then shows the Keras layer parameters properly loaded. I'm not sure why you see `(unused)` without the forward pass; perhaps `KerasLayer` isn't correctly overriding a required method. As a paranoia check, you can confirm that the model is sensitive to the Keras layer parameters by calculating the gradient:

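```python
import tensorflow as tf

with tf.GradientTape() as tape:
    out = model(x_test[:2])

# Jacobian of the output with respect to each trainable weight of qlayer;
# non-zero entries mean the output actually depends on those parameters.
tape.jacobian(out, qlayer.trainable_weights)
```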
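If you want to see both steps without PennyLane in the loop, here is a minimal sketch using plain Keras `Dense` layers as stand-ins for `qlayer` and `clayer` (an assumption: it only shows the generic Keras behaviour, not `KerasLayer` itself). A standard Keras layer creates its weights in `build()`, which only runs once the input shape is known, so in a `Sequential` model with no input shape the layer owns no weights until the first call. One possible explanation for the `(unused)` entry is that `KerasLayer` behaves the same way, but that is not confirmed here.

```python
import numpy as np
import tensorflow as tf

# Plain Keras stand-ins (assumption): `first` plays the role of `qlayer`,
# `second` the role of `clayer`.
first = tf.keras.layers.Dense(3, activation="tanh")
second = tf.keras.layers.Dense(1)

model = tf.keras.models.Sequential()
model.add(first)
model.add(second)

# No input shape has been given yet, so build() has not run
# and the layer owns no weights.
built_before = first.built
n_weights_before = len(first.weights)

x = np.random.default_rng(0).random((2, 4)).astype("float32")
model(x)  # first forward pass: build() runs and the weights are created

n_params_after = model.count_params()  # (4*3 + 3) + (3*1 + 1) = 19

# Gradient sanity check: one Jacobian per trainable weight of `first`,
# each with shape out.shape + weight.shape.
with tf.GradientTape() as tape:
    out = model(x)
jacs = tape.jacobian(out, first.trainable_weights)

for w, j in zip(first.trainable_weights, jacs):
    # A None Jacobian would mean the output does not depend on this weight.
    print(w.name, None if j is None else tuple(j.shape))
```

With the real model, the same pattern applies: if any entry of the Jacobian list for `qlayer.trainable_weights` comes back as `None` or all zeros, the output is not connected to those parameters.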